Vera

Vera is an AI-powered privacy agent built on a foundation of responsible design principles, providing always-on guidance and operational assistance as an embedded extension of your team. Your team can use Vera through a chat interface or through select actions within the product that leverage Vera's capabilities.

Vera is built on the DataGrail platform, allowing it to uniquely deliver:

- Context-aware guidance: Combines industry-leading connectivity, an extensive metadata library, and privacy AI to make actionable recommendations specific to your DataGrail environment.

- Customer-specific insights: Get a comprehensive view of risk across the entire platform, backed by the Risk Register.

- Unrivaled security and trust: Built on a secure, isolated AI agent architecture to deliver zero external training exposure, 100% isolation, time-limited access, sensitive field redaction, and more.

Examples of Vera in Use

Here are some example prompts organized by what you're trying to accomplish.

| Category | Description | Example prompts |
|---|---|---|
| Understanding your data | Analytics, trends, summaries, risk awareness | |
| Managing your workload | Task prioritization, items needing attention | |
| Getting guidance | Onboarding, regulations, form completion, documentation | |
| Monitoring operations | Integration health, system detection, activity tracking | |

See Vera in action

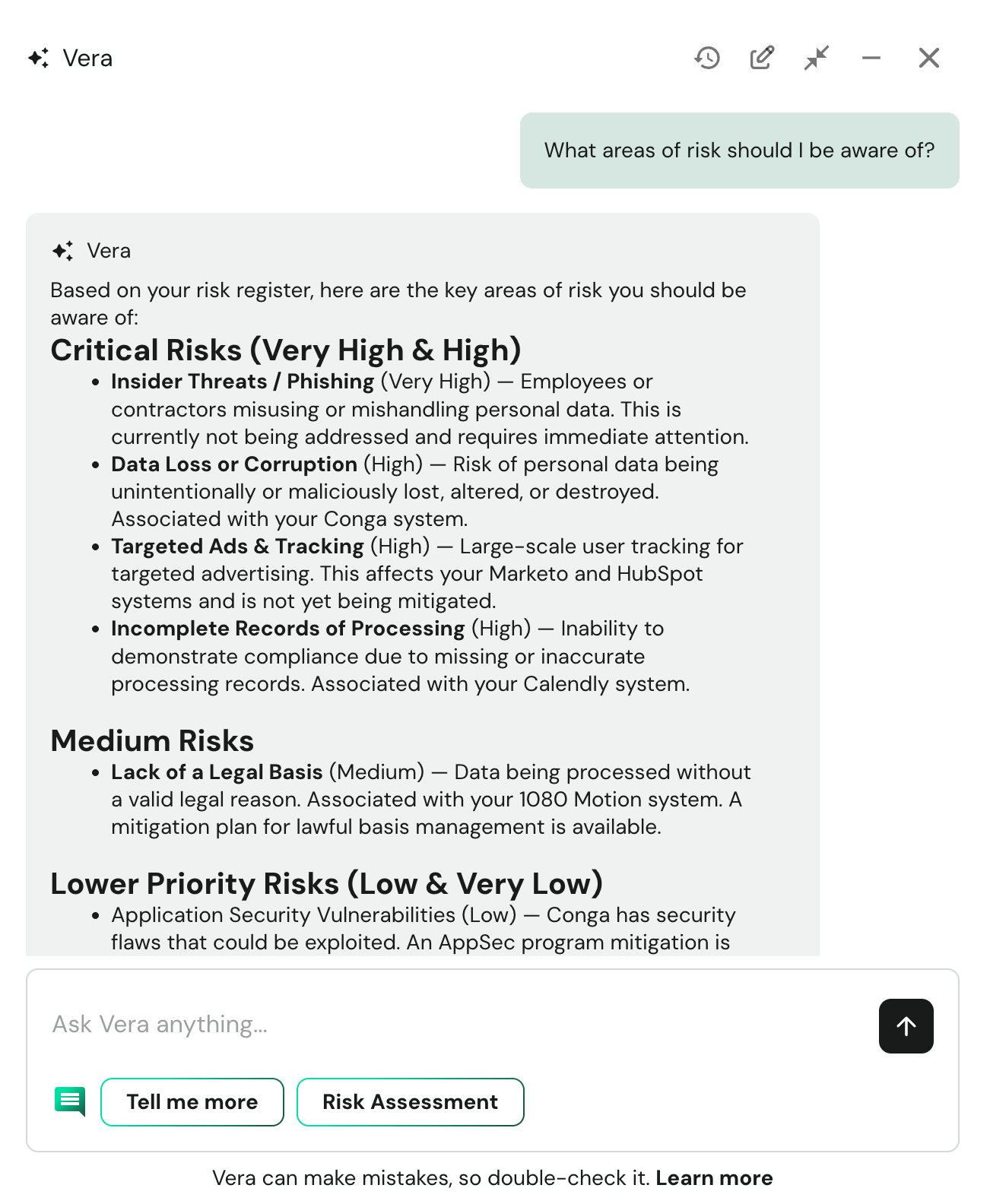

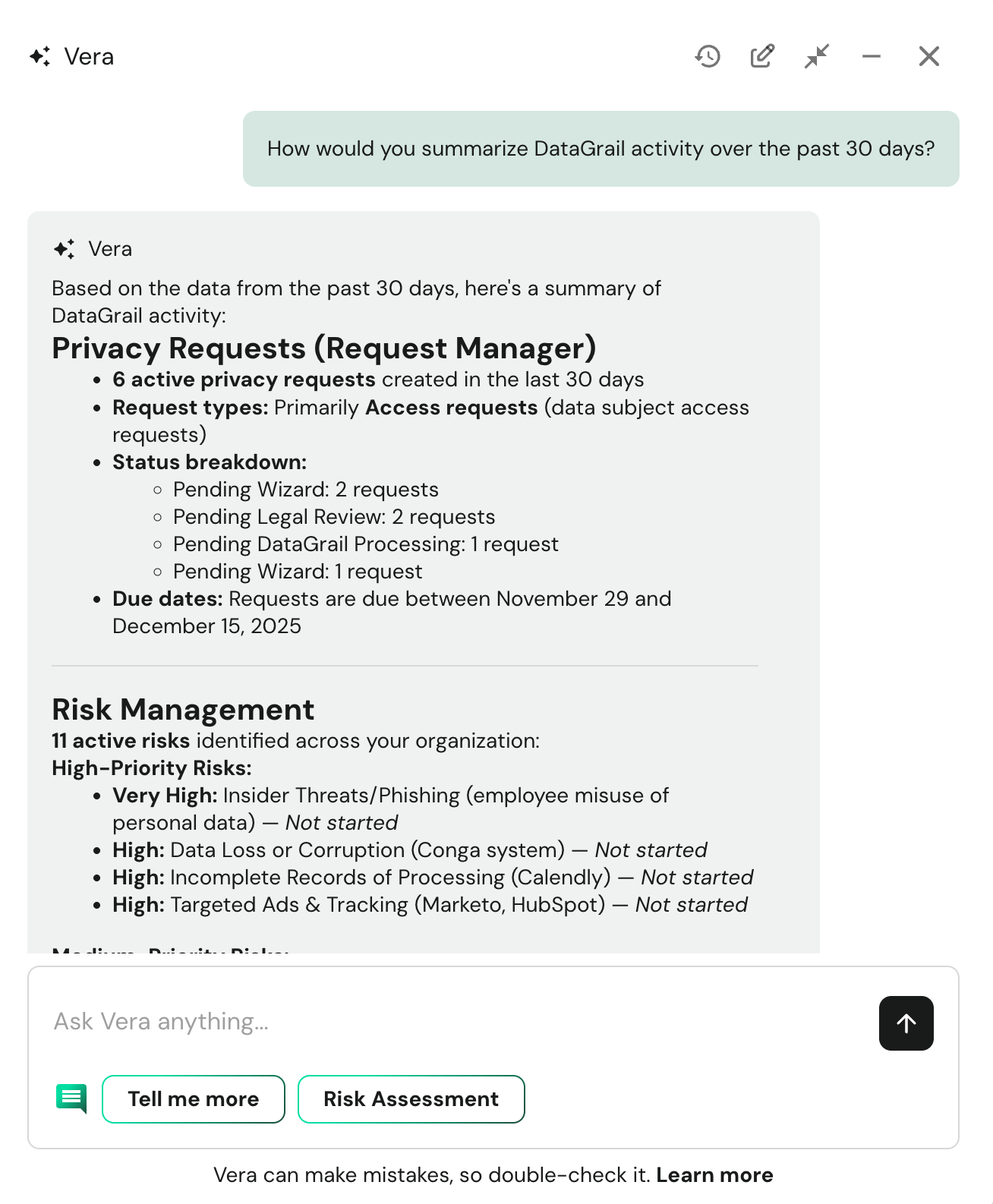

Prioritizing tasks - Vera can help you prioritize the tasks you need to work on, within a single product or across products, based on the risk each task mitigates for your organization.

Summarizing activities - Vera can summarize the information on the page you are viewing, giving you context and reports tailored to your needs.

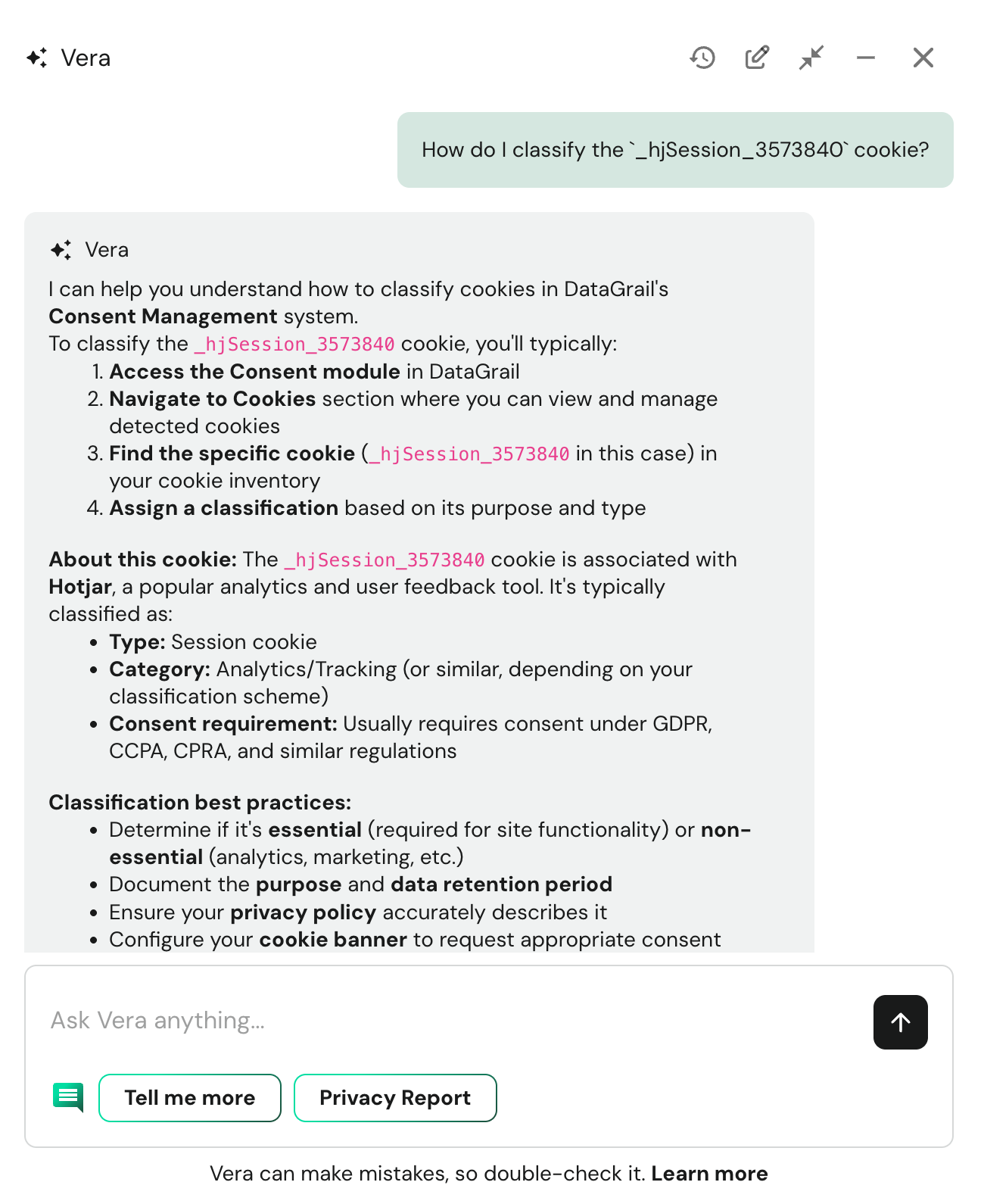

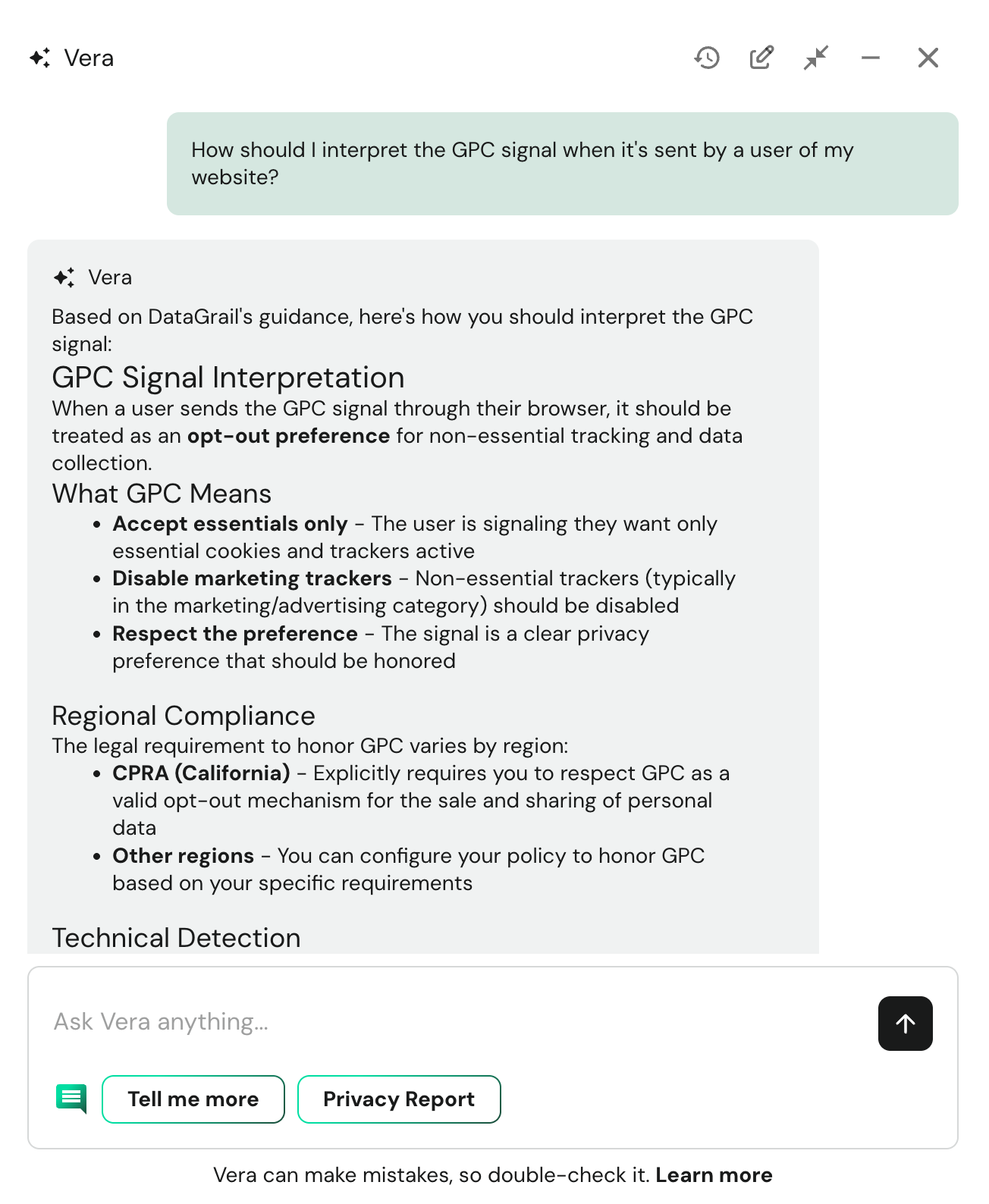

Suggesting updates - Vera can provide guidance on how to fill out an assessment question, define fields in a RoPA, categorize cookies, and more.

Regulation summaries - Vera can provide insights into and overviews of various legal frameworks, such as the CCPA and GDPR.

If you come up with more, please share them in the Privacy Basecamp!

Privacy & Security Standards

At DataGrail, trust is our most important asset. We understand that introducing AI capabilities requires a new level of transparency and a steadfast commitment to data security.

We designed Vera, our AI-powered privacy agent, with a security-first and privacy-by-design architecture. This document outlines the comprehensive measures we've taken to ensure your data remains secure, isolated, and under your control at all times.

Core Principles

Our entire architecture is built on these principles:

- Strict tenant isolation: Your data is never co-mingled with another customer's data. It is architecturally impossible for Vera to access, see, or report on any data outside of your own organization.

- Obeys your permissions: Vera inherits the exact same permissions as the user who is asking the question. If you don't have permission to see a piece of data in DataGrail, Vera won't be able to see it either.

- Separation of logic and data: Vera's "brain" (the AI model, Claude Haiku 4.5) is hosted in a completely separate environment (AWS Bedrock) from the core DataGrail database. The AI never has direct access to your data; instead, it accesses your data through a multi-layered security model (more below).

- No training on customer data: Your data is used only as context for the model to generate responses; it is not used to train the model. The relevant records are read from the database and passed to the model each time you ask a question.

- Full auditability: Every request Vera makes for data is fully logged in a detailed audit trail, providing complete transparency for compliance and review. We can provide logs of the conversations to you upon request.

Secure Data Access

We use a multi-layered security model to protect every question you ask. Here is a simplified step-by-step flow of how Vera processes a request securely.

- You submit a question: You submit a question into the Vera chat interface within the DataGrail platform.

- A secure, temporary token is created: Our system generates a unique, short-lived (5-minute) security token (a JWT). This token acts as a temporary "pass" that is tied specifically to you, your organization, and that single conversation.

- Vera receives and processes the query: Your question is then processed by industry-leading LLMs hosted on AWS Bedrock. Today, we use Claude Haiku 4.5, but we may employ other models in the future if they provide a better experience.

- Vera requests data from DataGrail: The AI agent cannot access your database directly. Instead, it must make a request back to a secure, dedicated DataGrail API endpoint that acts as a Model Context Protocol (MCP) gateway. It presents its temporary token as proof of identity.

- DataGrail verifies everything: This is the most critical step. Before any data is released, our MCP gateway runs a multi-layer security check:

  - Token validation: Is the token authentic and unexpired?

  - Tenant check: Does the customer ID in the token match your organization?

  - User check: Does the user ID in the token match your user account?

  - Permission check: Does your user role (e.g., Admin, Request Agent) have permission to view the data being requested?

- Secure, scoped data retrieval: Only after all checks pass does DataGrail retrieve the data. The database query itself is also automatically filtered to ensure it only runs against your organization's data.

- Vera synthesizes and displays the answer: The AI agent receives only the specific, authorized data it requested. It then uses this data to formulate a natural language answer and sends it back to you. The temporary token is not used again for this query.

This entire process, from question to answer, happens in seconds, with multiple security checkpoints enforced along the way.
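The token and gateway steps above can be illustrated with a minimal Python sketch. It is not DataGrail's implementation: the function names (`issue_token`, `authorize`, `fetch_scoped`), the claim names, the signing key, the `session` dictionary, and the role-to-resource table are all assumptions made for illustration. It uses only the standard library to sign a 5-minute HS256 JWT and then run the four checks in order before returning tenant-filtered rows.

```python
import base64
import hashlib
import hmac
import json
import time

SECRET = b"demo-signing-key"  # hypothetical key; real systems use a managed secret
TOKEN_TTL_SECONDS = 5 * 60    # the 5-minute lifetime described in the flow

# Hypothetical mapping of roles to the resources they may view.
ROLE_PERMISSIONS = {
    "Admin": {"requests", "risk_register"},
    "Request Agent": {"requests"},
}


def _b64url(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64url_decode(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(signing_input: str) -> bytes:
    return hmac.new(SECRET, signing_input.encode("ascii"), hashlib.sha256).digest()


def issue_token(customer_id, user_id, role, conversation_id, now=None):
    """Create a signed JWT tied to one tenant, user, and conversation."""
    now = int(time.time()) if now is None else now
    header = {"alg": "HS256", "typ": "JWT"}
    claims = {
        "customer_id": customer_id,
        "sub": user_id,
        "role": role,
        "conversation_id": conversation_id,
        "exp": now + TOKEN_TTL_SECONDS,
    }
    signing_input = (
        _b64url(json.dumps(header).encode()) + "." + _b64url(json.dumps(claims).encode())
    )
    return signing_input + "." + _b64url(_sign(signing_input))


def authorize(token, session, resource, now=None):
    """Run the four gateway checks in order; return (allowed, reason)."""
    now = int(time.time()) if now is None else now
    try:
        header_b64, claims_b64, sig_b64 = token.split(".")
        expected = _sign(header_b64 + "." + claims_b64)
        # Token validation, part 1: is the signature authentic?
        if not hmac.compare_digest(expected, _b64url_decode(sig_b64)):
            return False, "invalid signature"
        claims = json.loads(_b64url_decode(claims_b64))
    except (ValueError, TypeError):
        return False, "malformed token"
    # Token validation, part 2: is the token unexpired?
    if now >= claims["exp"]:
        return False, "token expired"
    # Tenant check
    if claims["customer_id"] != session["customer_id"]:
        return False, "tenant mismatch"
    # User check
    if claims["sub"] != session["user_id"]:
        return False, "user mismatch"
    # Permission check
    if resource not in ROLE_PERMISSIONS.get(claims["role"], set()):
        return False, "permission denied"
    return True, "ok"


def fetch_scoped(rows, customer_id, resource):
    """Return only rows for this tenant; the tenant filter is always applied."""
    return [r for r in rows if r["customer_id"] == customer_id and r["resource"] == resource]


if __name__ == "__main__":
    token = issue_token("acme", "user-1", "Request Agent", "conv-1")
    session = {"customer_id": "acme", "user_id": "user-1"}
    print(authorize(token, session, "requests"))
    print(authorize(token, session, "risk_register"))
```

In this sketch the checks run from cheapest and most fundamental (signature, expiry) to most specific (role permission), so a forged or stale token is rejected before any tenant or role logic is evaluated.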

Slack Integration

Vera is also available directly in Slack, allowing your team to get privacy guidance and assistance without leaving their primary communication tool. By connecting your Slack workspace to DataGrail, authorized users can have conversations with Vera through direct messages.

Connecting Your Slack Workspace

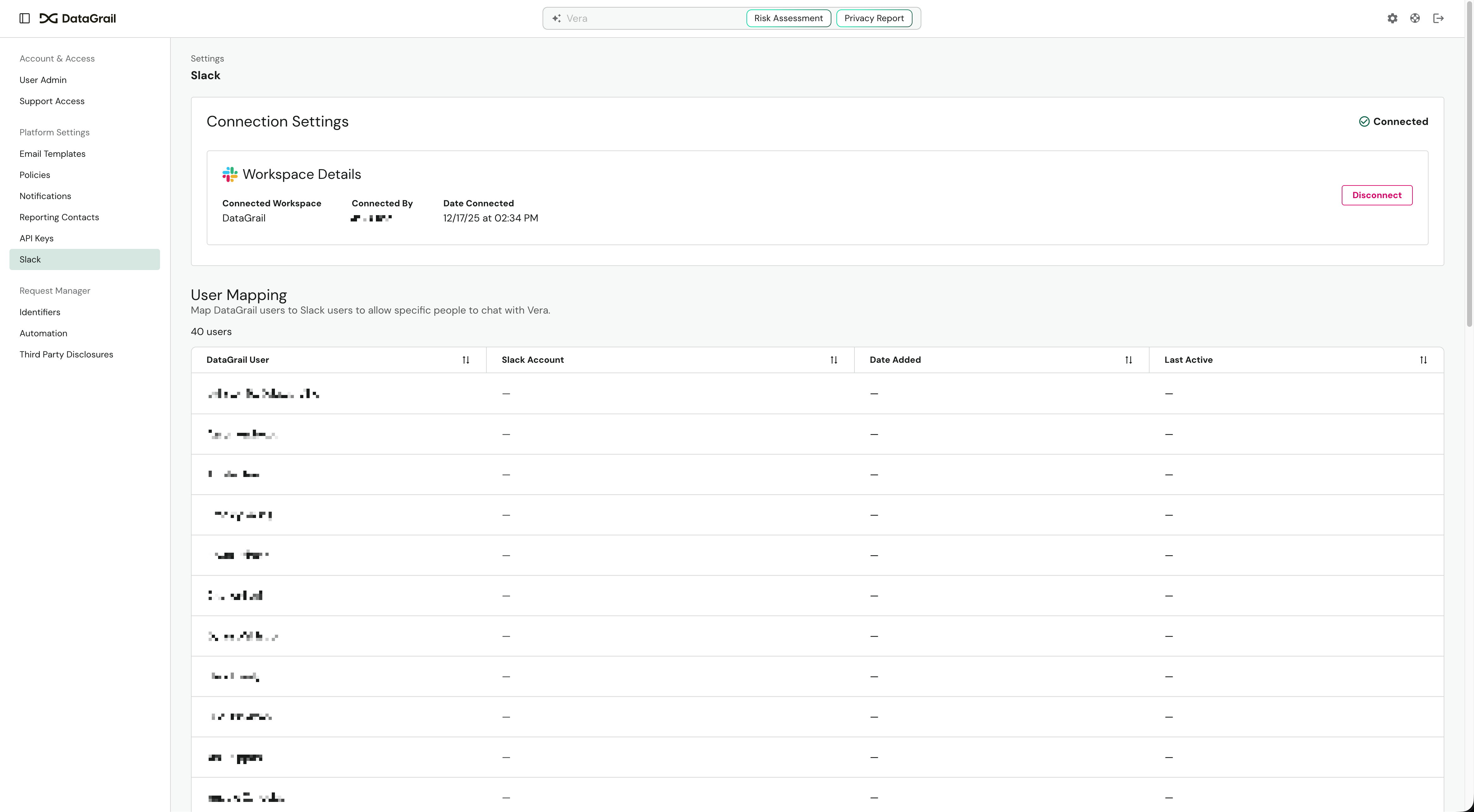

To enable Vera in Slack, an administrator must first connect your organization's Slack workspace to DataGrail.

- Navigate to Settings > Slack in your DataGrail dashboard.

- Click the Connect Slack Workspace button.

- You will be redirected to Slack to authorize the DataGrail Vera app.

- Review the requested permissions and click Allow.

- Once authorized, you will be redirected back to DataGrail with a confirmation that the connection was successful.

After connecting, you'll see your workspace details including the workspace name, who connected it, and when.

User Mapping

For security and access control, only users who are explicitly mapped can chat with Vera in Slack. This ensures that only authorized team members can access privacy guidance through the Slack integration.

To manage user access:

- Navigate to Settings > Slack in your DataGrail dashboard.

- In the User Mapping section, you'll see a list of DataGrail users.

- For each user you want to grant Slack access, select their corresponding Slack account from the dropdown (we search by email).

- Save the mapping.

The User Mapping table displays:

- DataGrail User: The user's name or email in DataGrail

- Slack Account: The linked Slack user (if mapped)

- Date Added: When the mapping was created

- Last Active: When the user last interacted with Vera in Slack

Users who attempt to message Vera without being mapped will receive a message directing them to contact their DataGrail administrator for access.
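The mapping rule described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not DataGrail's code: the `Mapping` and `UserMappings` classes, the `handle_message` function, and the wording of the reply to unmapped users are all hypothetical. The sketch shows a lookup keyed by Slack user ID that either resolves to a DataGrail user (and updates Last Active) or returns the "contact your administrator" message.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional

# Hypothetical wording; the actual message shown to unmapped users may differ.
UNMAPPED_REPLY = (
    "You don't have access to Vera in Slack yet. "
    "Please contact your DataGrail administrator."
)


@dataclass
class Mapping:
    datagrail_user: str                      # "DataGrail User" column
    slack_user_id: str                       # "Slack Account" column
    date_added: datetime                     # "Date Added" column
    last_active: Optional[datetime] = None   # "Last Active" column


class UserMappings:
    def __init__(self):
        self._by_slack_id = {}

    def add(self, datagrail_user, slack_user_id):
        """Record an explicit mapping created by an administrator."""
        now = datetime.now(timezone.utc)
        self._by_slack_id[slack_user_id] = Mapping(datagrail_user, slack_user_id, now)

    def resolve(self, slack_user_id):
        """Return the mapped DataGrail user, or None if the Slack user is unmapped."""
        mapping = self._by_slack_id.get(slack_user_id)
        if mapping is None:
            return None
        mapping.last_active = datetime.now(timezone.utc)
        return mapping.datagrail_user


def handle_message(mappings, slack_user_id, text):
    """Gate every incoming Slack message on the explicit user mapping."""
    user = mappings.resolve(slack_user_id)
    if user is None:
        return UNMAPPED_REPLY
    # Placeholder for the real answer, produced with that user's permissions.
    return f"(answer for {user})"
```

Because access is denied by default, a Slack user who is not in the table never reaches the step where a question is answered.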

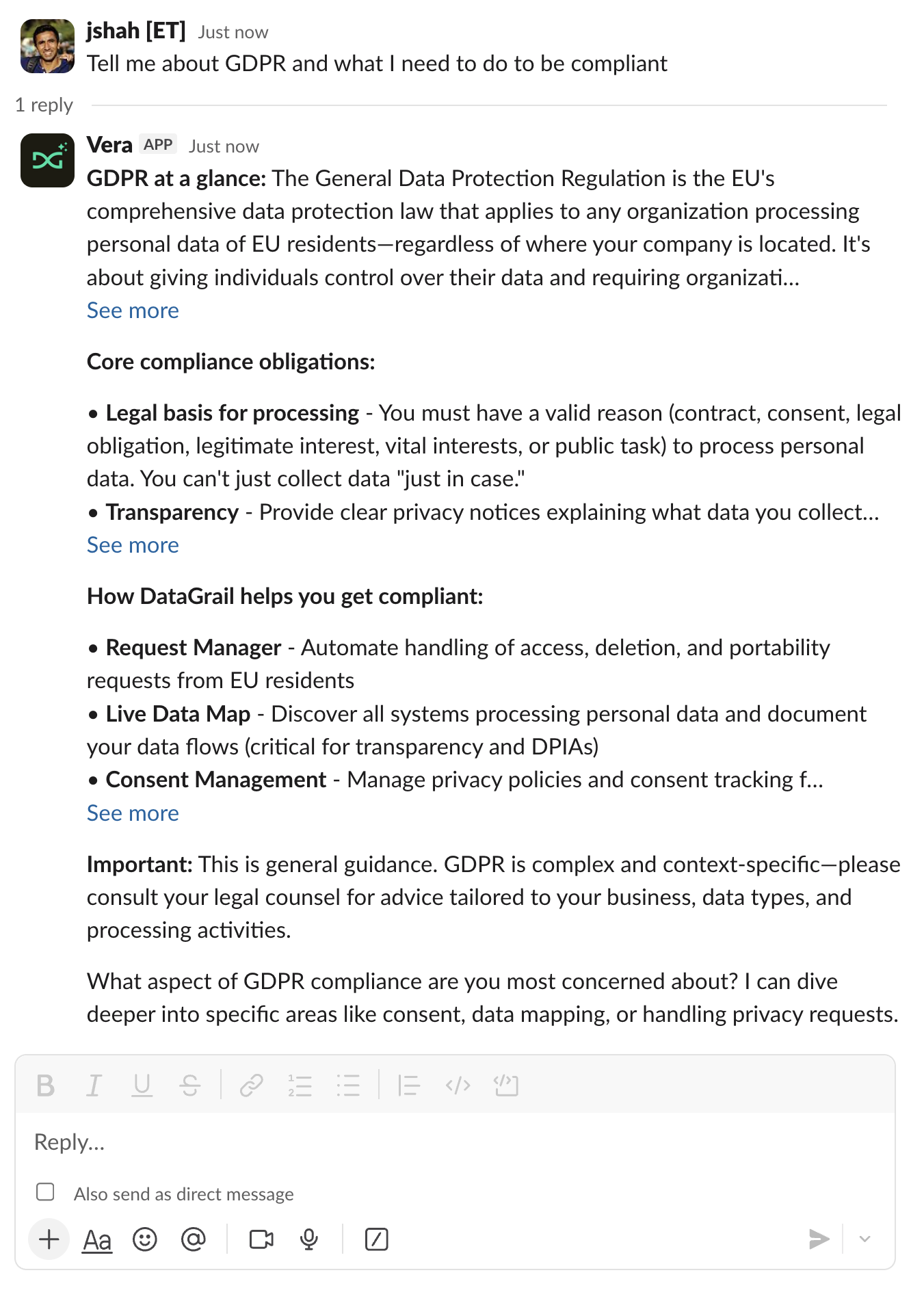

Chatting with Vera in Slack

Once your workspace is connected and your user account is mapped, you can start chatting with Vera directly in Slack.

- Open Slack and find the Vera bot in your direct messages.

- Send a message with your privacy question or request.

- Vera will respond in the same thread with guidance tailored to your DataGrail environment.

Vera in Slack has the same capabilities as Vera in the DataGrail web interface:

- Answer privacy and compliance questions

- Provide guidance on regulations like CCPA and GDPR

- Help prioritize tasks across DataGrail products

- Summarize information from your DataGrail environment

Conversations in Slack maintain context within a thread, so you can have multi-turn conversations just like in the web interface.
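One way to picture per-thread context is a history store keyed by channel and thread. The sketch below is an assumption about how such a store could work, not a description of Vera's internals; the `ThreadContexts` class is hypothetical. It relies only on the `channel`, `ts`, `thread_ts`, and `text` fields that Slack includes in message events, where a reply carries the `thread_ts` of the message that started the thread.

```python
from collections import defaultdict


class ThreadContexts:
    """Keep a separate message history for each Slack thread."""

    def __init__(self):
        self._history = defaultdict(list)

    @staticmethod
    def thread_key(event):
        # A reply carries thread_ts; a top-level message starts a new
        # thread identified by its own ts.
        return (event["channel"], event.get("thread_ts") or event["ts"])

    def record(self, event):
        """Append the message to its thread and return that thread's history."""
        key = self.thread_key(event)
        self._history[key].append(event["text"])
        return list(self._history[key])


if __name__ == "__main__":
    contexts = ThreadContexts()
    first = {"channel": "D1", "ts": "100.1", "text": "What is GDPR?"}
    reply = {"channel": "D1", "ts": "100.2", "thread_ts": "100.1", "text": "And CCPA?"}
    contexts.record(first)
    print(contexts.record(reply))
```

A follow-up question sent as a thread reply sees the earlier messages, while a new top-level message starts with an empty history.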

Vera in Slack inherits the same permissions as your DataGrail user account. If you don't have permission to view certain data in DataGrail, Vera won't be able to access it when responding to your Slack messages.

Disconnecting Your Workspace

If you need to disconnect your Slack workspace from DataGrail:

- Navigate to Settings > Slack in your DataGrail dashboard.

- Click the Disconnect button in the Workspace Details section.

- Confirm the disconnection when prompted.

Disconnecting will immediately revoke Vera's access to your Slack workspace. All user mappings will be preserved, so if you reconnect later, you won't need to reconfigure them.

Providing Feedback

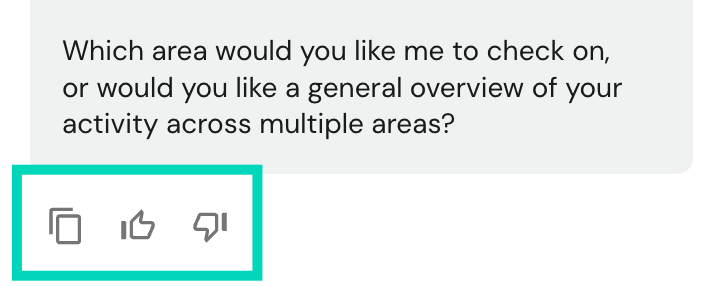

Generative AI models can make mistakes in their responses, misinterpret inputs, or veer off course in conversations. While we continue to take steps to reduce these issues, they may still occur from time to time.

If a response doesn't meet your expectations, please use the feedback buttons to let us know.

This input helps us refine Vera's responses and guide how it uses the context it receives from your messages.

Periodically, we will request more detailed feedback on inaccurate responses; please specify what you expected and what Vera returned.

Disclaimer: The information contained in this message does not constitute legal advice. We advise seeking professional counsel before acting on or interpreting any material.